The early history of artificial intelligence presents a more nuanced picture than is often portrayed. While the 1956 Dartmouth workshop undoubtedly served as a formal founding event, the conceptual groundwork was already developing along multiple trajectories.

The Early Bifurcation in AI Approaches

AI research developed along two distinct paths almost from its inception:

Symbolic AI: John McCarthy, Marvin Minsky, and others advocated systems based on formal logic, symbol manipulation, and explicit representations of knowledge. McCarthy's development of LISP in 1958 provided a programming language specifically designed for symbolic computation. I was delighted when I discovered LISP in the mid-1980s and realised that with LISP you can write programs that modify themselves or write other programs. Because LISP represents programs with the same list structures it uses for data, systems written in it could analyse and generate their own code, which made the language especially suitable for AI research.
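
LISP's ability to treat programs as data comes from writing code as nested lists (s-expressions). The snippet below is not LISP: it is a minimal Python sketch that imitates the idea, assuming expressions are encoded as nested Python lists, so that ["+", 1, ["*", 2, 3]] stands in for (+ 1 (* 2 3)). The helpers evaluate, make_power and simplify are hypothetical names chosen for illustration. One function writes a new program and another rewrites an existing one, using nothing more than ordinary list operations.

```python
import operator

# Python stand-in for LISP s-expressions (illustrative assumption):
# a program is a number, a variable name (str), or [op, left, right].
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def evaluate(expr, env):
    """Evaluate a program: numbers are literals, strings are variables."""
    if isinstance(expr, (int, float)):
        return expr
    if isinstance(expr, str):
        return env[expr]
    op, left, right = expr
    return OPS[op](evaluate(left, env), evaluate(right, env))


def make_power(n):
    """Write a new program: an expression computing x**n for n >= 1."""
    expr = "x"
    for _ in range(n - 1):
        expr = ["*", "x", expr]
    return expr


def simplify(expr):
    """Rewrite an existing program: drop multiplications by 1 and additions of 0."""
    if not isinstance(expr, list):
        return expr
    op, left, right = expr[0], simplify(expr[1]), simplify(expr[2])
    if op == "*" and right == 1:
        return left
    if op == "*" and left == 1:
        return right
    if op == "+" and right == 0:
        return left
    if op == "+" and left == 0:
        return right
    return [op, left, right]


cube = make_power(3)                      # ['*', 'x', ['*', 'x', 'x']]
print(evaluate(cube, {"x": 2}))           # 8
print(simplify(["+", ["*", "x", 1], 0]))  # x
```

In real LISP no separate encoding is needed, since the program text already is a list, which is what made this style of self-manipulating code so natural there.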

Connectionist Approaches: Frank Rosenblatt's perceptron (1957) represented a fundamentally different vision: machines that could learn from data rather than being explicitly programmed with rules. Drawing inspiration from biological neural structures, the perceptron learned from experience by adjusting the weights of its connections in response to training examples.
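
The core of a perceptron's learning can be sketched in a few lines. The following is a minimal Python illustration of the error-correction rule as it is usually presented in modern textbooks (a single threshold unit whose weights are nudged by learning rate × error × input), not a reproduction of Rosenblatt's original hardware or his exact 1958 formulation. The function names, learning rate, epoch limit and the AND training set are illustrative assumptions.

```python
# Modern textbook form of the perceptron rule (assumption: not Rosenblatt's
# exact 1958 formulation). A single threshold unit learns from labelled examples.


def predict(weights, bias, x):
    """Threshold unit: output 1 if the weighted sum exceeds zero, else 0."""
    activation = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Error-correction learning: adjust weights only on misclassified examples."""
    n = len(samples[0][0])
    weights = [0.0] * n
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            if error != 0:
                errors += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if errors == 0:  # every training example is now classified correctly
            break
    return weights, bias


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND)
print([predict(weights, bias, x) for x, _ in AND])  # [0, 0, 0, 1]
```

No rule for AND is written anywhere in the code: the unit arrives at suitable weights purely from the labelled examples, which is the contrast with the symbolic approach described above.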

Both approaches coexisted during AI's earliest years, though their relationship was complex.

The Dartmouth workshop included participants with varying perspectives. While McCarthy and Minsky would become strongly associated with symbolic approaches, others like Solomonoff and Arthur Samuel (whose checkers program learned through experience) explored more statistical and learning-based methods.

Claude Shannon, the founder of information theory and another Dartmouth attendee, had interests spanning both formal logic and statistical approaches to information.

The involvement of researchers from neurophysiology and logic, such as neurophysiologist Warren McCulloch and logician Walter Pitts, whose 1943 paper proposed a computational model of neural networks, highlights the neurological inspiration present from the beginning.

Frank Rosenblatt, an American psychologist, wrote in 1958: “Stories about the creation of machines having human qualities have long been a fascinating province in the realm of science fiction…” He continued: “Yet we are about to witness the birth of such a machine – a machine capable of perceiving, recognising and identifying its surroundings without any human training or control.”

Rosenblatt's ambitious 1958 statement reveals that learning-based approaches were not mere sidelines but represented a parallel vision for AI's development. His perceptron generated significant excitement precisely because it offered a different path to machine intelligence than purely symbolic approaches.

I discovered his work in the late 1980s and was surprised that I had never heard of such significant work before.

Ray Solomonoff: A Bridge Between Paradigms

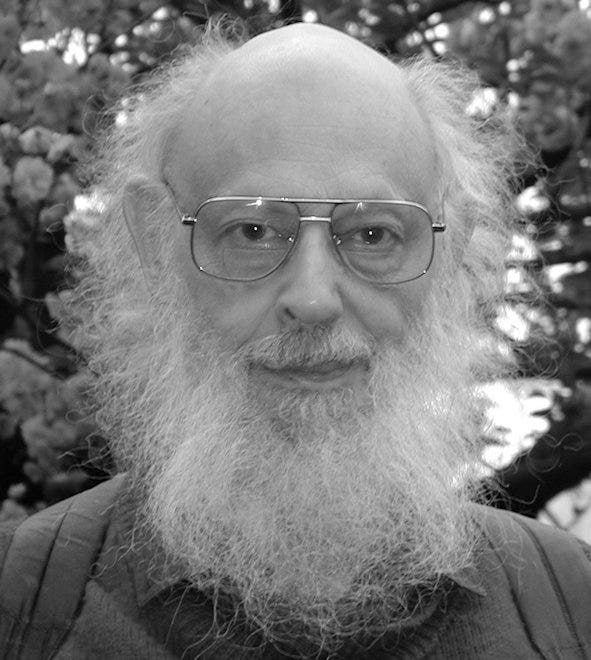

Ray Solomonoff (1926-2009) occupies a unique position in early AI history. Though present at the Dartmouth workshop, his contributions don't fit neatly into either the symbolic or connectionist camps. Solomonoff's work on algorithmic probability and inductive inference laid theoretical foundations for machine learning before "machine learning" existed as a term.

His 1964 paper "A Formal Theory of Inductive Inference" established principles that would later influence both symbolic reasoning systems and statistical learning approaches. Solomonoff's universal prior distribution concept addressed the fundamental question of how machines could learn from experience and generalise from data—concerns central to both AI paradigms.

I became familiar with Solomonoff’s work during my PhD and was immediately struck by the depth and significance of his contributions to algorithmic information theory. This field defines the complexity of a piece of data as the length of the shortest program that can generate it, which leads to concepts such as algorithmic probability and algorithmic randomness.

Solomonoff's universal prior distribution (often called the "Solomonoff prior") provided a mathematical formalisation of Occam's razor, probably my favourite scientific principle, by assigning higher probability to simpler hypotheses, that is, to those that can be described by shorter programs. This fundamental idea is echoed in modern Bayesian methods that incorporate complexity penalties, such as the Bayesian Information Criterion and Bayesian nonparametric models.
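
A toy version of this weighting makes the idea concrete. Roughly speaking, in Solomonoff's formulation every program of length ℓ bits that reproduces the observed data contributes a weight of 2^(-ℓ), so shorter explanations count for exponentially more. The true prior sums over all programs for a universal machine and is incomputable, so the minimal Python sketch below assumes just three hand-picked rules for a binary sequence, each with a made-up description length. The names HYPOTHESES, occam_prior, posterior and prob_next_is_one are hypothetical.

```python
from fractions import Fraction

# Toy stand-in for Solomonoff's prior (illustrative assumption): the real prior
# sums over all programs of a universal machine and is incomputable. Here each
# hypothesis is a rule predicting the bit at position i, paired with a made-up
# description length in bits.
HYPOTHESES = {
    "all zeros": (lambda i: 0, 3),
    "alternating": (lambda i: i % 2, 5),
    "zeros then ones": (lambda i: 0 if i < 4 else 1, 9),
}


def occam_prior(hypotheses):
    """Weight each hypothesis by 2**(-length) and normalise to sum to 1."""
    weights = {name: Fraction(1, 2 ** length) for name, (_, length) in hypotheses.items()}
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


def posterior(hypotheses, observed):
    """Discard rules that contradict the observed bits, then renormalise.

    Each rule is deterministic, so its likelihood is either 1 or 0.
    """
    prior = occam_prior(hypotheses)
    consistent = {
        name: prior[name]
        for name, (rule, _) in hypotheses.items()
        if all(rule(i) == bit for i, bit in enumerate(observed))
    }
    total = sum(consistent.values())
    return {name: p / total for name, p in consistent.items()}


def prob_next_is_one(hypotheses, observed):
    """Posterior-weighted probability that the next bit is 1."""
    post = posterior(hypotheses, observed)
    n = len(observed)
    return sum(p for name, p in post.items() if hypotheses[name][0](n) == 1)


data = [0, 0, 0, 0]
print(posterior(HYPOTHESES, data))         # {'all zeros': Fraction(64, 65), 'zeros then ones': Fraction(1, 65)}
print(prob_next_is_one(HYPOTHESES, data))  # 1/65
```

Both surviving rules fit the four observed zeros perfectly, yet the shorter one receives 64/65 of the posterior mass, so the predicted probability that the next bit is 1 is only 1/65. This is Occam's razor expressed as arithmetic.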

The Historical Context

This dual development occurred within a specific historical context:

Computing resources were extremely limited, favouring more abstract, rule-based approaches that could function within tight constraints.

The cognitive revolution in psychology was underway, emphasising mental processes as computational.

Cold War funding priorities shaped research directions, with symbolic approaches often receiving more substantial support in the early years.

Rather than seeing AI history as a linear progression from symbolic approaches to connectionist ones, it's more accurate to recognise that both paradigms were present from the beginning, with their relative prominence shifting over time due to theoretical developments, available technology, and changing research priorities.

Additional Research and References

Primary references:

Russell, S., & Norvig, P. (2021). Artificial Intelligence: A Modern Approach (4th ed.). Pearson.

Solomonoff, R. J. (1964). "A Formal Theory of Inductive Inference." Information and Control, 7(1), 1-22.

McCarthy, J. (1960). "Recursive functions of symbolic expressions and their computation by machine, Part I." Communications of the ACM, 3(4), 184-195.

Rosenblatt, F. (1958). "The perceptron: A probabilistic model for information storage and organization in the brain." Psychological Review, 65(6), 386-408.

McCulloch, W. S., & Pitts, W. (1943). "A logical calculus of the ideas immanent in nervous activity." Bulletin of Mathematical Biophysics, 5, 115-133.

Orbanz, P., & Teh, Y. W. (2017). "Bayesian Nonparametric Models." In C. Sammut & G. I. Webb (Eds.), Encyclopedia of Machine Learning and Data Mining. Springer, Boston, MA.

Secondary references for historical context:

Crevier, D. (1993). AI: The Tumultuous History of the Search for Artificial Intelligence. Basic Books.

Nilsson, N. J. (2010). The Quest for Artificial Intelligence: A History of Ideas and Achievements. Cambridge University Press.